Introduction: The End of an Era

For the past fifteen years, “cloud-first” has been the unchallenged mantra of technology infrastructure strategy. Organizations large and small migrated workloads to centralized data centers operated by hyperscale providers, consolidating computational power in massive facilities scattered across the globe. This paradigm delivered unprecedented scalability, reduced capital expenditure, and democratized access to enterprise-grade infrastructure.

But a fundamental shift is now underway—one that challenges the very foundations of cloud-first thinking.

We are witnessing the emergence of edge-first architecture. This distributed computing paradigm places processing power not in distant mega-facilities but at thousands of micro-locations at the network’s edge, as close as possible to end users and connected devices.

This isn’t simply an incremental evolution. It’s a complete architectural transformation that changes how we think about infrastructure planning, deployment, security, and scale.

Understanding the Edge-First Paradigm

What Is Edge Computing?

Edge computing refers to the practice of processing data at or near the source of data generation, rather than sending it to centralized cloud data centers potentially hundreds or thousands of miles away. Edge nodes—ranging from small compute appliances in retail stores to micro data centers in telecommunications facilities—perform local processing, analytics, and decision-making.

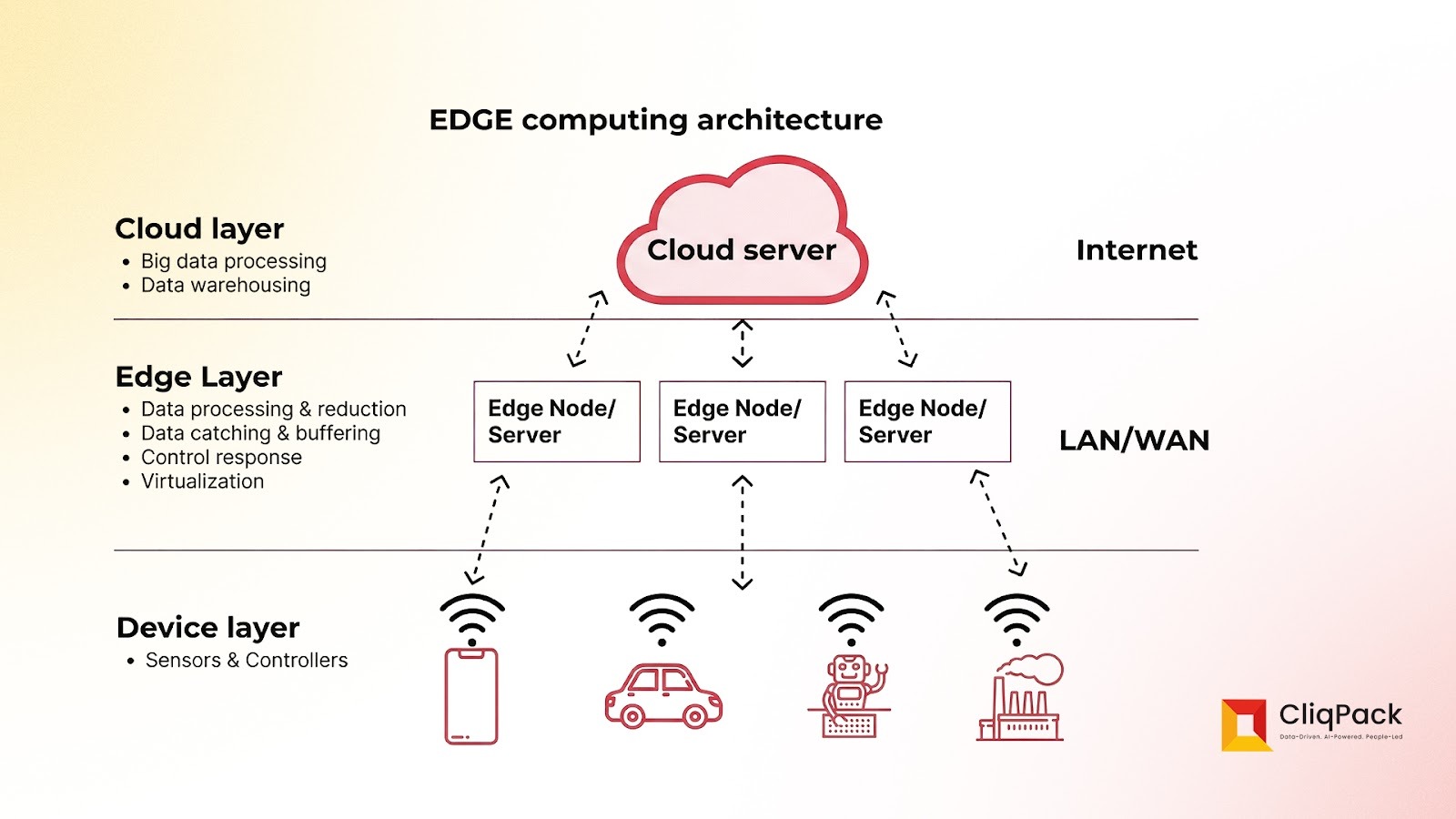

In the diagram, the cloud layer handles big data processing on cloud servers reached over the internet. The edge layer consists of edge nodes and servers that handle data ingestion, buffering, virtualization, and caching, linked via LAN/WAN. The device layer consists of sensors, controllers, phones, cars, and factories that send data upward.

This architecture reduces latency by processing data closer to sources, minimizing cloud dependency for real-time tasks like IoT monitoring.

The Fundamental Architectural Difference

Cloud-First Architecture:

- Centralized processing in large data centers

- Data travels long distances for computation

- Dozens of massive facilities serve global demand

- Scaling by adding capacity within mega-facilities

- Centralized security and management

Edge-First Architecture:

- Distributed processing across thousands of locations

- Computation happens where data is generated

- Micro-locations positioned near users and devices

- Horizontal scaling across distributed nodes

- Decentralized security boundaries

The shift from cloud-first to edge-first represents a move from centralization to intelligent distribution.

The Latency Imperative

Why Milliseconds Matter

The single most compelling driver of edge-first architecture is latency—the time delay between initiating an action and receiving a response.

A round trip to a distant cloud region can involve latency of 100-300 milliseconds or more, depending on geographic distance and network conditions. For many applications, this is perfectly acceptable. But for an emerging class of use cases, this delay is unacceptable, even dangerous.

Edge-first architecture reduces latency to single-digit milliseconds, enabling entirely new categories of applications and experiences.

Real-Time Processing Use Cases

Autonomous Vehicles: A self-driving car traveling at 60 mph covers 88 feet per second. A 200-millisecond delay means the vehicle travels nearly 18 feet before receiving a response from a cloud server. For critical safety decisions—detecting a pedestrian stepping into the road, responding to sudden braking by another vehicle—this delay could be catastrophic.

Edge processing enables autonomous systems to make split-second decisions locally, with only non-critical data and long-term learning being synchronized to central facilities.
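
The distance arithmetic above is easy to reproduce. The sketch below is only an illustration: the function name `distance_during_delay_ft` is made up for this example, and it assumes nothing more than constant speed and a fixed delay.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def distance_during_delay_ft(speed_mph: float, delay_ms: float) -> float:
    """Return the distance in feet covered at speed_mph during delay_ms."""
    feet_per_second = speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR
    return feet_per_second * (delay_ms / 1000)


if __name__ == "__main__":
    # Compare a cloud-like delay with an edge-like delay at 60 mph.
    for delay_ms in (200, 100, 5):
        feet = distance_during_delay_ft(60, delay_ms)
        print(f"{delay_ms:>3} ms at 60 mph -> {feet:.2f} ft")
```

At 60 mph, a 200 ms delay works out to 17.6 feet, while a 5 ms delay shrinks that to under half a foot.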

Industrial Automation: Modern manufacturing relies on robotics and automated systems that must coordinate movements with precision timing. A robotic arm assembling electronics components, a packaging line running at high speed, or a chemical processing system maintaining precise temperatures—all require feedback loops measured in single-digit milliseconds.

Round trips to a distant cloud region make control loops this tight impractical. Edge computing keeps the loop local and makes these operations routine.

Interactive AI Applications: The next generation of AI assistants, augmented reality experiences, and interactive systems demand responsiveness that feels natural and immediate. Users perceive delays above 100 milliseconds as “laggy” or unresponsive. To create AI experiences that feel fluid and natural—whether that’s real-time language translation, interactive virtual assistants, or AR overlays on live video—edge processing is essential.

The Infrastructure Transformation

From Mega-Facilities to Micro-Locations

Cloud-first architecture consolidated computing into a relatively small number of massive data centers. AWS operates more than 30 regions globally, each made up of multiple availability zones. Microsoft Azure has more than 60 regions. These facilities are enormous, often hundreds of thousands of square feet, housing tens of thousands of servers.

Edge-first architecture inverts this model entirely. Instead of dozens of mega-facilities, edge computing requires thousands—potentially millions—of micro-locations. These edge nodes might be:

- Small compute appliances installed in cell towers

- Micro data centers in retail locations

- Edge servers in hospital facilities

- Computing infrastructure in vehicles themselves

- Dedicated hardware in industrial facilities

Each location has far less computational power than a hyperscale data center, but collectively they provide massive distributed capacity positioned exactly where it’s needed.

Data Synchronization Challenges

In cloud-first architecture, data naturally consolidates in centralized databases. Synchronization is relatively straightforward—applications write to central data stores, and all nodes access the same source of truth.

Edge-first architecture creates fundamental data synchronization challenges:

- Distributed State Management: How do thousands of edge nodes maintain consistent state?

- Conflict Resolution: When multiple nodes update the same data, which version wins?

- Bandwidth Optimization: Constantly synchronizing all data between edge and cloud would overwhelm networks

- Eventual Consistency: Edge systems must operate effectively even when temporarily disconnected from central facilities

These challenges require sophisticated distributed database technologies, conflict-free replicated data types (CRDTs), and intelligent caching strategies that traditional centralized architectures rarely needed.
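
To make the CRDT idea concrete, here is a minimal sketch of one of the simplest CRDTs, a grow-only counter (G-Counter). The class, the store names, and the "items sold" scenario are illustrative assumptions, not taken from any particular database. Each node increments only its own slot, and merging takes the per-node maximum, so replicas converge no matter how often or in what order they sync.

```python
from dataclasses import dataclass, field


@dataclass
class GCounter:
    """Grow-only counter CRDT: one monotonically increasing slot per node."""

    node_id: str
    counts: dict[str, int] = field(default_factory=dict)

    def increment(self, amount: int = 1) -> None:
        """Increase this node's own slot; other slots are never written locally."""
        if amount < 0:
            raise ValueError("a G-Counter can only grow")
        self.counts[self.node_id] = self.counts.get(self.node_id, 0) + amount

    def value(self) -> int:
        """The counter's value is the sum of all per-node slots."""
        return sum(self.counts.values())

    def merge(self, other: "GCounter") -> None:
        """Take the per-node maximum: commutative, associative, idempotent."""
        for node, count in other.counts.items():
            self.counts[node] = max(self.counts.get(node, 0), count)


# Two hypothetical stores count items sold while disconnected from each other.
store_a = GCounter("store-a")
store_b = GCounter("store-b")
store_a.increment(3)
store_b.increment(2)

# Syncing in either direction, any number of times, yields the same total.
store_a.merge(store_b)
store_b.merge(store_a)
store_a.merge(store_b)  # repeating a merge changes nothing
```

After the merges both replicas report the same total without any node having to decide which version "wins". Data that can shrink or be overwritten needs richer CRDTs or an explicit conflict policy.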

Security Boundary Redefinition

Cloud-first security models rely on strong perimeter defenses around centralized data centers. Security teams can focus protective measures on a limited number of facilities with controlled physical and network access.

Edge-first architecture explodes this model. Thousands of edge locations create thousands of potential attack surfaces. Many edge nodes exist in physically unsecured environments—retail stores, street-level installations, vehicles.

This requires fundamentally different security approaches:

- Zero Trust Architecture: Assume no location is inherently trusted; verify every transaction

- Local Encryption: Data must be encrypted both in transit and at rest at every edge location

- Autonomous Security: Edge nodes must detect and respond to threats without waiting for centralized direction

- Minimal Privilege: Each edge node should have access only to data strictly necessary for its function

Security in edge-first architecture must be designed into the system from the ground up, not added as a perimeter defense.
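
As one small piece of "verify every transaction", a central service might require each edge node to sign its messages with a per-node secret. The sketch below uses Python's standard `hmac` module. The node ID, the in-memory `node_keys` table, and the `accept_request` function are hypothetical, and a real zero trust deployment would add much more, such as mutual TLS, key rotation, and replay protection.

```python
import hashlib
import hmac
import secrets


def sign(key: bytes, message: bytes) -> str:
    """Return a hex-encoded HMAC-SHA256 signature for the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, message: bytes, signature: str) -> bool:
    """Check a signature using a constant-time comparison."""
    expected = sign(key, message)
    return hmac.compare_digest(expected, signature)


# Hypothetical key table: one secret per edge node, so a stolen device
# exposes only its own key. A real system would keep these in a secrets store.
node_keys = {"edge-001": secrets.token_bytes(32)}


def accept_request(node_id: str, message: bytes, signature: str) -> bool:
    """Reject unknown nodes and any message whose signature does not match."""
    key = node_keys.get(node_id)
    if key is None:
        return False
    return verify(key, message, signature)
```

Every message is checked on its own merits. Being on the "inside" of the network grants nothing.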

Deployment and Operations Complexity

Deploying and managing thousands of distributed edge nodes creates operational challenges that cloud-first organizations never faced:

- Automated Deployment: Manual configuration of thousands of locations is impossible

- Remote Management: Edge nodes must be configurable, updatable, and monitorable from central operations

- Health Monitoring: Detecting and responding to failures across distributed infrastructure

- Capacity Planning: Predicting and provisioning compute capacity across diverse micro-locations

These challenges are driving investment in infrastructure-as-code, Kubernetes at the edge, and AI-powered operations management that can handle the complexity of truly distributed systems.
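
Health monitoring at this scale usually starts with something simple, such as heartbeats. The following is a minimal sketch, assuming each node periodically reports a timestamp to central operations. The function name, the node names, and the 60-second timeout are illustrative choices rather than recommendations.

```python
def find_stale_nodes(
    last_heartbeat: dict[str, float],
    now: float,
    timeout_s: float = 60.0,
) -> list[str]:
    """Return the nodes whose last heartbeat is older than timeout_s seconds."""
    return sorted(
        node for node, seen in last_heartbeat.items() if now - seen > timeout_s
    )


# Hypothetical fleet: timestamps are seconds on a shared clock.
heartbeats = {"store-12": 1000.0, "tower-7": 940.0, "plant-3": 880.0}
stale = find_stale_nodes(heartbeats, now=1005.0)
```

In practice the timeout would be tuned per site, since a node on a flaky retail connection should not page anyone as quickly as one in a telecom facility.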

The Hybrid Reality

Edge and Cloud Are Complementary

It’s crucial to understand that edge-first architecture doesn’t eliminate the cloud—it redefines its role.

Edge computing is ideal for:

- Real-time processing requiring low latency

- Local decision-making and immediate responses

- Reducing bandwidth by processing data locally

- Operating during network disruptions

Cloud computing remains optimal for:

- Long-term data storage and analytics

- Machine learning model training (as opposed to inference)

- Aggregating data from thousands of edge locations

- Applications where latency isn’t critical

The future isn’t “edge or cloud”—it’s intelligent distribution of workloads across a computing continuum from edge to cloud based on the specific requirements of each application.
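
One common way to split the work is for the edge node to react to raw readings locally and send only a compact summary upstream. Here is a minimal sketch, assuming a batch of numeric sensor readings and a fixed alert threshold. The function name and the summary fields are invented for illustration.

```python
from statistics import mean


def summarize_window(readings: list[float], alert_threshold: float) -> dict:
    """Reduce a window of raw readings to a small summary for the cloud."""
    if not readings:
        raise ValueError("cannot summarize an empty window")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Readings above the threshold are kept verbatim; in a real system
        # the edge node would also act on them immediately.
        "alerts": [r for r in readings if r > alert_threshold],
    }


# Hypothetical temperature readings collected at the edge.
window = [20.0, 21.0, 22.0, 95.0]
summary = summarize_window(window, alert_threshold=90.0)
```

The cloud still receives enough to run long-term analytics, but the bulk of the raw data never leaves the site.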

Industry-Specific Implications

Healthcare

Hospitals and clinics are deploying edge infrastructure to enable:

- Real-time patient monitoring with instant alerts

- Surgical robots requiring near-zero-latency responsiveness

- Medical imaging analysis at the point of care

- Privacy-preserving local processing of sensitive health data

Retail

Retailers are leveraging edge computing for:

- In-store computer vision for inventory management

- Real-time personalization without sending customer data to the cloud

- Autonomous checkout systems processing transactions locally

- Store operations that continue functioning during network outages

Telecommunications

5G networks are fundamentally edge-first architectures, with:

- Multi-access edge computing (MEC) integrated into cell towers

- Ultra-low latency for mobile applications

- Processing happening within the network itself rather than distant data centers

Manufacturing

Industrial environments are deploying edge solutions for:

- Predictive maintenance analyzing sensor data in real-time

- Quality control computer vision at production speed

- Coordinated robotics requiring millisecond response times

- Secure, air-gapped processing of sensitive production data

The Technology Stack Evolution

Edge-Native Technologies

The shift to edge-first architecture is driving innovation across the entire technology stack:

Edge Computing Platforms:

- AWS Wavelength, Azure Edge Zones, Google Distributed Cloud

- Open source projects like KubeEdge and OpenYurt

- Specialized edge orchestration platforms

Edge-Optimized Databases:

- Distributed databases designed for eventual consistency

- Time-series databases for sensor data

- Edge-native caching and synchronization solutions

Lightweight Containers:

- Smaller, more efficient container runtimes for resource-constrained edge devices

- WebAssembly gaining traction for edge workloads

AI at the Edge:

- Model compression techniques to run sophisticated AI on limited hardware

- Federated learning enabling model training across distributed devices

- Edge-specific AI accelerators and neural processing units
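
Model compression covers several techniques. One of the most common is quantization, which stores weights as 8-bit integers instead of 32-bit floats. The sketch below shows a simplified symmetric scheme in plain Python. The function names are illustrative, and production toolchains typically work per layer or per channel and calibrate on real data.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric linear quantization of weights to the range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # The scale maps the largest magnitude onto 127; avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from their int8 representation."""
    return [q * scale for q in quantized]


# Hypothetical weights from a tiny model layer.
weights = [0.5, -1.0, 0.25, 0.0]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
```

Each restored weight differs from the original by at most half a quantization step, which is the accuracy being traded for roughly a 4x reduction in storage compared with 32-bit floats.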

Strategic Implications for Organizations

When to Adopt Edge-First Thinking

Not every organization needs to immediately pivot to edge-first architecture. The decision depends on your specific use cases:

Strong Edge-First Candidates:

- Applications requiring sub-100ms latency

- IoT deployments with thousands of connected devices

- Use cases involving real-time video or sensor processing

- Scenarios requiring operation during network disruptions

- Applications processing sensitive data that should stay local

Cloud-First Remains Appropriate:

- Batch processing and analytics workloads

- Applications where latency above 100ms is acceptable

- Workloads requiring massive computational power

- Scenarios where data centralization provides value
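
The criteria above can be turned into a rough first-pass triage for an application portfolio. This is a sketch only: the `Workload` fields and the `recommend_tier` function are hypothetical, and the 100 ms cutoff simply mirrors the threshold used in the lists above. A real decision would also weigh cost, data volume, and operational maturity.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """A simplified description of one application or service."""

    name: str
    latency_budget_ms: float
    must_run_offline: bool = False
    data_must_stay_local: bool = False
    is_batch: bool = False


def recommend_tier(workload: Workload) -> str:
    """Return "edge" or "cloud" as a starting point for discussion."""
    # Hard constraints come first: offline operation and data locality
    # cannot be satisfied from a distant data center.
    if workload.must_run_offline or workload.data_must_stay_local:
        return "edge"
    if workload.is_batch:
        return "cloud"
    return "edge" if workload.latency_budget_ms < 100 else "cloud"


# Hypothetical portfolio entries.
portfolio = [
    Workload("robot-control", latency_budget_ms=5),
    Workload("nightly-report", latency_budget_ms=60_000, is_batch=True),
    Workload("pos-checkout", latency_budget_ms=500, must_run_offline=True),
]
recommendations = {w.name: recommend_tier(w) for w in portfolio}
```

Running every workload through the same small function keeps the audit consistent and makes the assumptions behind each placement easy to challenge.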

The Transition Strategy

Organizations moving toward edge-first architecture should take the following steps:

- Identify Latency-Sensitive Workloads: Audit existing applications to determine which would benefit from edge processing

- Start with Pilot Deployments: Test edge solutions at limited scale before full deployment

- Build Edge Capabilities: Invest in the skills, tools, and partnerships needed for distributed infrastructure

- Design for Distribution: New applications should be architected from the start with edge capabilities in mind

- Plan the Integration: Edge and cloud must work seamlessly together; design the data flows and synchronization strategies carefully

Steps to Implement an Edge-First Strategy

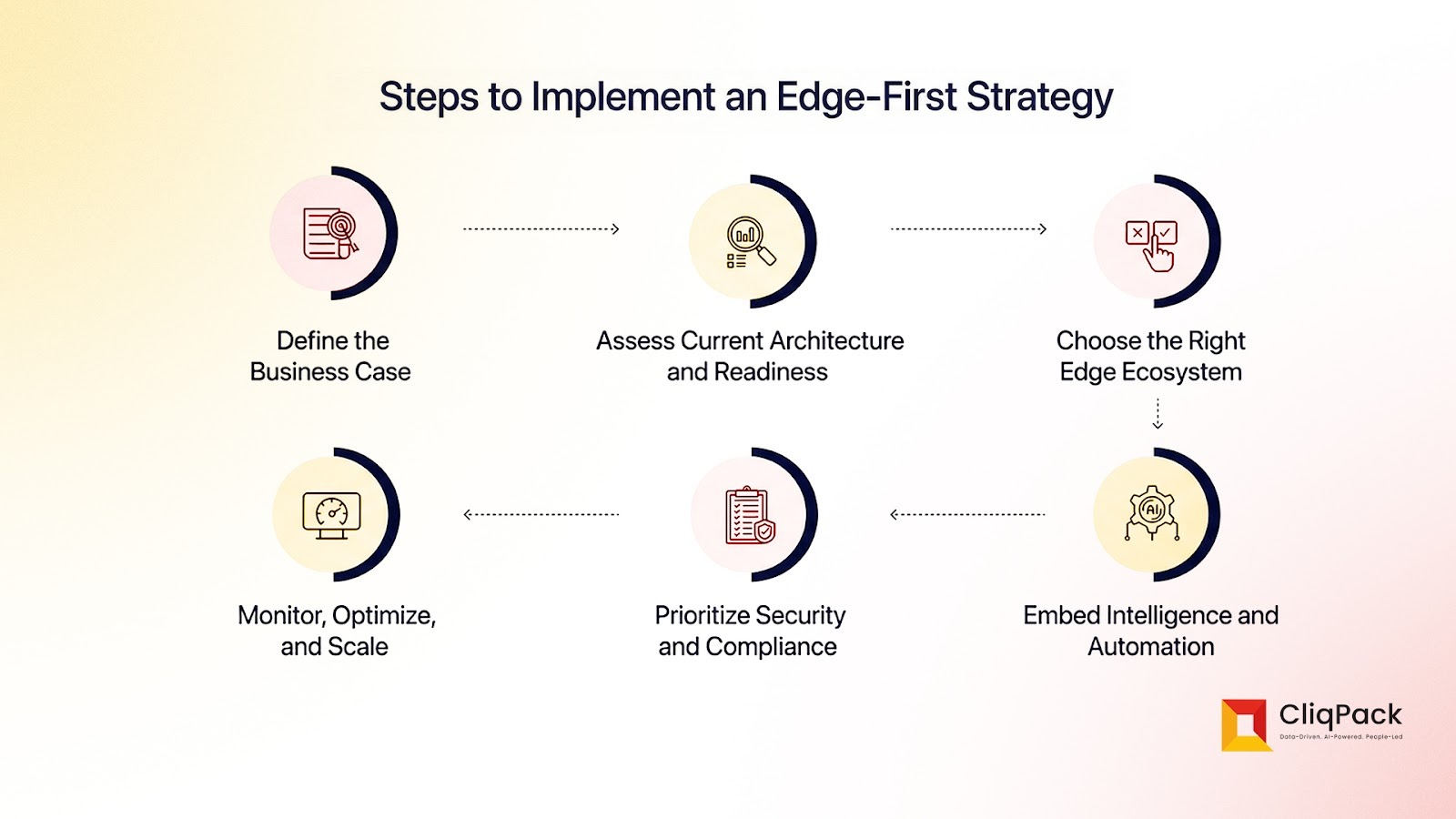

The implementation follows a structured six-step process designed to transition organizations toward edge computing effectively.

- Define Business Case: Identify specific business objectives, use cases, and expected outcomes to justify the edge-first approach. This initial step ensures alignment with organizational goals.

- Assess Current Architecture and Readiness: Evaluate existing infrastructure, identify gaps, and determine readiness for edge integration. This assessment helps mitigate risks during deployment.

- Choose Right Edge Ecosystem: Select appropriate edge platforms, tools, and partners that match technical and operational requirements. Compatibility ensures seamless scalability.

- Prioritize Security and Compliance: Implement robust security measures, encryption, and regulatory compliance from the outset. Protecting data at the edge prevents vulnerabilities in distributed environments.

- Embed Intelligence and Automation: Integrate AI, machine learning, and automation capabilities into edge nodes for real-time decision-making. This enhances efficiency and responsiveness.

- Monitor, Optimize, and Scale: Deploy monitoring tools to track performance, optimize resources, and scale operations as needed. Continuous improvement sustains long-term success.

This cyclical process, as depicted in the diagram, promotes iterative refinement for sustained edge computing adoption.

The Future of Distributed Computing

The shift from cloud-first to edge-first architecture represents one of the most significant infrastructure transformations since the rise of cloud computing itself.

As autonomous systems, industrial automation, interactive AI, and IoT deployments proliferate, the demand for ultra-low latency processing will only increase. Organizations that understand this shift and architect their infrastructure accordingly will have decisive advantages in responsiveness, user experience, and operational efficiency.

The cloud isn’t disappearing—it’s being augmented and complemented by a massive distributed computing layer that brings processing power to the very edge of the network.

The future of infrastructure isn’t centralized or distributed—it’s intelligently distributed, with the right workloads processed in the right locations across a computing continuum from edge to cloud.

The edge-first revolution is here. The question is: Is your infrastructure ready?

What’s your organization’s approach to edge computing? Are you exploring edge-first architecture, or are you still fully committed to cloud-first strategies? Share your thoughts and experiences in the comments below.